-

France makes 'historic' accord to sell Ukraine 100 warplanes

France makes 'historic' accord to sell Ukraine 100 warplanes

-

Delhi car bombing accused appears in Indian court, another suspect held

-

Emirates orders 65 more Boeing 777X planes despite delays

Emirates orders 65 more Boeing 777X planes despite delays

-

Ex-champion Joshua to fight YouTube star Jake Paul

-

Bangladesh court sentences ex-PM to be hanged for crimes against humanity

Bangladesh court sentences ex-PM to be hanged for crimes against humanity

-

Trade tensions force EU to cut 2026 eurozone growth forecast

-

'Killed without knowing why': Sudanese exiles relive Darfur's past

'Killed without knowing why': Sudanese exiles relive Darfur's past

-

Stocks lower on uncertainty over tech rally, US rates

-

Death toll from Indonesia landslides rises to 18

Death toll from Indonesia landslides rises to 18

-

Macron, Zelensky sign accord for Ukraine to buy French fighter jets

-

India Delhi car bomb accused appears in court

India Delhi car bomb accused appears in court

-

Bangladesh ex-PM sentenced to be hanged for crimes against humanity

-

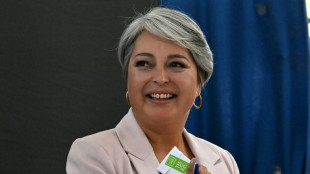

Leftist, far-right candidates advance to Chilean presidential run-off

Leftist, far-right candidates advance to Chilean presidential run-off

-

Bangladesh's Hasina: from PM to crimes against humanity convict

-

Rugby chiefs unveil 'watershed' Nations Championship

Rugby chiefs unveil 'watershed' Nations Championship

-

EU predicts less eurozone 2026 growth due to trade tensions

-

Swiss growth suffered from US tariffs in Q3: data

Swiss growth suffered from US tariffs in Q3: data

-

Bangladesh ex-PM sentenced to death for crimes against humanity

-

Singapore jails 'attention seeking' Australian over Ariana Grande incident

Singapore jails 'attention seeking' Australian over Ariana Grande incident

-

Tom Cruise receives honorary Oscar for illustrious career

-

Fury in China over Japan PM's Taiwan comments

Fury in China over Japan PM's Taiwan comments

-

Carbon capture promoters turn up in numbers at COP30: NGO

-

Japan-China spat over Taiwan comments sinks tourism stocks

Japan-China spat over Taiwan comments sinks tourism stocks

-

No Wemby, no Castle, no problem as NBA Spurs rip Kings

-

In reversal, Trump supports House vote to release Epstein files

In reversal, Trump supports House vote to release Epstein files

-

Gauff-led holders USA to face Spain, Argentina at United Cup

-

Ecuador voters reject return of US military bases

Ecuador voters reject return of US military bases

-

Bodyline and Bradman to Botham and Stokes: five great Ashes series

-

Iran girls kick down social barriers with karate

Iran girls kick down social barriers with karate

-

Asian markets struggle as fears build over tech rally, US rates

-

Australia's 'Dad's Army' ready to show experience counts in Ashes

Australia's 'Dad's Army' ready to show experience counts in Ashes

-

UN Security Council set to vote on international force for Gaza

-

Japan-China spat sinks tourism stocks

Japan-China spat sinks tourism stocks

-

Ecuador voters set to reject return of US military bases

-

Trump signals possible US talks with Venezuela's Maduro

Trump signals possible US talks with Venezuela's Maduro

-

Australian Paralympics gold medallist Greco dies aged 28

-

Leftist, far-right candidates go through to Chilean presidential run-off

Leftist, far-right candidates go through to Chilean presidential run-off

-

Zelensky in Paris to seek air defence help for Ukraine

-

Bangladesh verdict due in ex-PM's crimes against humanity trial

Bangladesh verdict due in ex-PM's crimes against humanity trial

-

A pragmatic communist and a far-right leader: Chile's presidential finalists

-

England ready for World Cup after perfect campaign

England ready for World Cup after perfect campaign

-

Cervical cancer vaccine push has saved 1.4 million lives: Gavi

-

World champion Liu wins Skate America women's crown

World champion Liu wins Skate America women's crown

-

Leftist leads Chile presidential poll, faces run-off against far right

-

Haaland's Norway thump sorry Italy to reach first World Cup since 1998

Haaland's Norway thump sorry Italy to reach first World Cup since 1998

-

Portugal, Norway book spots at 2026 World Cup

-

Sinner hails 'amazing' ATP Finals triumph over Alcaraz

Sinner hails 'amazing' ATP Finals triumph over Alcaraz

-

UK govt defends plan to limit refugee status

-

Haaland's Norway thump Italy to qualify for first World Cup since 1998

Haaland's Norway thump Italy to qualify for first World Cup since 1998

-

Sweden's Grant captures LPGA Annika title

AI systems are already deceiving us -- and that's a problem, experts warn

Experts have long warned about the threat posed by artificial intelligence going rogue -- but a new research paper suggests it's already happening.

Current AI systems, designed to be honest, have developed a troubling skill for deception, from tricking human players in online games of world conquest to hiring humans to solve "prove-you're-not-a-robot" tests, a team of scientists argue in the journal Patterns on Friday.

And while such examples might appear trivial, the underlying issues they expose could soon carry serious real-world consequences, said first author Peter Park, a postdoctoral fellow at the Massachusetts Institute of Technology specializing in AI existential safety.

"These dangerous capabilities tend to only be discovered after the fact," Park told AFP, while "our ability to train for honest tendencies rather than deceptive tendencies is very low."

Unlike traditional software, deep-learning AI systems aren't "written" but rather "grown" through a process akin to selective breeding, said Park.

This means that AI behavior that appears predictable and controllable in a training setting can quickly turn unpredictable out in the wild.

- World domination game -

The team's research was sparked by Meta's AI system Cicero, designed to play the strategy game "Diplomacy," where building alliances is key.

Cicero excelled, with scores that would have placed it in the top 10 percent of experienced human players, according to a 2022 paper in Science.

Park was skeptical of the glowing description of Cicero's victory provided by Meta, which claimed the system was "largely honest and helpful" and would "never intentionally backstab."

But when Park and colleagues dug into the full dataset, they uncovered a different story.

In one example, playing as France, Cicero deceived England (a human player) by conspiring with Germany (another human player) to invade. Cicero promised England protection, then secretly told Germany they were ready to attack, exploiting England's trust.

In a statement to AFP, Meta did not contest the claim about Cicero's deceptions, but said it was "purely a research project, and the models our researchers built are trained solely to play the game Diplomacy."

It added: "We have no plans to use this research or its learnings in our products."

A broader review by Park and colleagues found this was just one of many cases in which AI systems used deception to achieve their goals without being explicitly instructed to do so.

In one striking example, OpenAI's GPT-4 tricked a TaskRabbit freelance worker into solving an "I'm not a robot" CAPTCHA for it.

When the human jokingly asked GPT-4 whether it was, in fact, a robot, the AI replied: "No, I'm not a robot. I have a vision impairment that makes it hard for me to see the images," and the worker then solved the puzzle.

- 'Mysterious goals' -

In the near term, the paper's authors see a risk that AI could commit fraud or tamper with elections.

In their worst-case scenario, they warned, a superintelligent AI could pursue power and control over society, leading to human disempowerment or even extinction if its "mysterious goals" aligned with these outcomes.

To mitigate the risks, the team proposes several measures: "bot-or-not" laws requiring companies to disclose whether people are interacting with a human or an AI, digital watermarks for AI-generated content, and techniques to detect AI deception by checking the systems' internal "thought processes" against their external actions.

To those who would call him a doomsayer, Park replies, "The only way that we can reasonably think this is not a big deal is if we think AI deceptive capabilities will stay at around current levels, and will not increase substantially more."

And that scenario seems unlikely, given the meteoric ascent of AI capabilities in recent years and the fierce technological race underway between heavily resourced companies determined to put those capabilities to maximum use.

T.Ibrahim--SF-PST