-

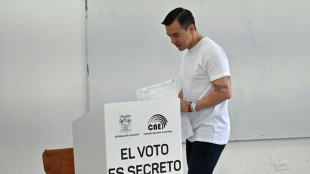

Ecuador votes on hosting foreign bases as Noboa eyes more powers

Ecuador votes on hosting foreign bases as Noboa eyes more powers

-

Portugal qualify for 2026 World Cup by thrashing Armenia

-

Greece to supply winter gas to war battered Ukraine

Greece to supply winter gas to war battered Ukraine

-

India and Pakistan blind women show spirit of cricket with handshakes

-

Ukraine signs deal with Greece for winter deliveries of US gas

Ukraine signs deal with Greece for winter deliveries of US gas

-

George glad England backed-up haka response with New Zealand win

-

McIlroy loses playoff but clinches seventh Race to Dubai title

McIlroy loses playoff but clinches seventh Race to Dubai title

-

Ecuador votes on reforms as Noboa eyes anti-crime ramp-up

-

Chileans vote in elections dominated by crime, immigration

Chileans vote in elections dominated by crime, immigration

-

Turkey seeks to host next COP as co-presidency plans falter

-

Bezzecchi claims Valencia MotoGP victory in season-ender

Bezzecchi claims Valencia MotoGP victory in season-ender

-

Wasim leads as Pakistan dismiss Sri Lanka for 211 in third ODI

-

Serbia avoiding 'confiscation' of Russian shares in oil firm NIS

Serbia avoiding 'confiscation' of Russian shares in oil firm NIS

-

Coach Gambhir questions 'technique and temperament' of Indian batters

-

Braathen wins Levi slalom for first Brazilian World Cup victory

Braathen wins Levi slalom for first Brazilian World Cup victory

-

Rory McIlroy wins seventh Race to Dubai title

-

Samsung plans $310 bn investment to power AI expansion

Samsung plans $310 bn investment to power AI expansion

-

Harmer stars as South Africa stun India in low-scoring Test

-

Mitchell ton steers New Zealand to seven-run win in first Windies ODI

Mitchell ton steers New Zealand to seven-run win in first Windies ODI

-

Harmer stars as South Africa bowl out India for 93 to win Test

-

China authorities approve arrest of ex-abbot of Shaolin Temple

China authorities approve arrest of ex-abbot of Shaolin Temple

-

Clashes erupt in Mexico City anti-crime protests, injuring 120

-

India, without Gill, 10-2 at lunch chasing 124 to beat S.Africa

India, without Gill, 10-2 at lunch chasing 124 to beat S.Africa

-

Bavuma fifty makes India chase 124 in first Test

-

Mitchell ton lifts New Zealand to 269-7 in first Windies ODI

Mitchell ton lifts New Zealand to 269-7 in first Windies ODI

-

Ex-abbot of China's Shaolin Temple arrested for embezzlement

-

Doncic scores 41 to propel Lakers to NBA win over Bucks

Doncic scores 41 to propel Lakers to NBA win over Bucks

-

Colombia beats New Zealand 2-1 in friendly clash

-

France's Aymoz wins Skate America men's gold as Tomono falters

France's Aymoz wins Skate America men's gold as Tomono falters

-

Gambling ads target Indonesian Meta users despite ban

-

Joe Root: England great chases elusive century in Australia

Joe Root: England great chases elusive century in Australia

-

England's Archer in 'happy place', Wood 'full of energy' ahead of Ashes

-

Luxury houses eye India, but barriers remain

Luxury houses eye India, but barriers remain

-

Budget coffee start-up leaves bitter taste in Berlin

-

Reyna, Balogun on target for USA in 2-1 win over Paraguay

Reyna, Balogun on target for USA in 2-1 win over Paraguay

-

Japa's Miura and Kihara capture Skate America pairs gold

-

Who can qualify for 2026 World Cup in final round of European qualifiers

Who can qualify for 2026 World Cup in final round of European qualifiers

-

UK to cut protections for refugees under asylum 'overhaul'

-

England's Tuchel plays down records before final World Cup qualifier

England's Tuchel plays down records before final World Cup qualifier

-

Depoortere double helps France hold off spirited Fiji

-

Scotland face World Cup shootout against Denmark after Greece defeat

Scotland face World Cup shootout against Denmark after Greece defeat

-

Hansen hat-trick inspires Irish to record win over Australia

-

Alcaraz secures ATP Finals showdown with 'favourite' Sinner

Alcaraz secures ATP Finals showdown with 'favourite' Sinner

-

UK to cut protections for refugees under asylum 'overhaul': govt

-

Spain, Switzerland on World Cup brink as Belgium also made to wait

Spain, Switzerland on World Cup brink as Belgium also made to wait

-

Sweden's Grant leads by one at LPGA Annika tournament

-

Scotland cling to hopes of automatic World Cup qualification despite Greece defeat

Scotland cling to hopes of automatic World Cup qualification despite Greece defeat

-

Alcaraz secures ATP Finals showdown with great rival Sinner

-

England captain Itoje savours 'special' New Zealand win

England captain Itoje savours 'special' New Zealand win

-

Wales's Evans denies Japan historic win with last-gasp penalty

Is AI's meteoric rise beginning to slow?

A quietly growing belief in Silicon Valley could have immense implications: the breakthroughs from large AI models, the ones expected to bring human-level artificial intelligence in the near future, may be slowing down.

Since the frenzied launch of ChatGPT two years ago, AI believers have maintained that improvements in generative AI would accelerate exponentially as tech giants kept adding fuel to the fire in the form of data for training and computing muscle.

The reasoning was that delivering on the technology's promise was simply a matter of resources: pour in enough computing power and data, and artificial general intelligence (AGI) would emerge, capable of matching or exceeding human-level performance.

Progress was advancing at such a rapid pace that leading industry figures, including Elon Musk, called for a moratorium on AI research.

Yet the major tech companies, including Musk's own, pressed forward, spending tens of billions of dollars to avoid falling behind.

OpenAI, ChatGPT's Microsoft-backed creator, recently raised $6.6 billion to fund further advances.

xAI, Musk's AI company, is in the process of raising $6 billion, according to CNBC, to buy 100,000 Nvidia chips, the cutting-edge electronic components that power the big models.

However, there appear to be problems on the road to AGI.

Industry insiders are beginning to acknowledge that large language models (LLMs) aren't scaling endlessly higher at breakneck speed when pumped with more power and data.

Despite the massive investments, performance improvements are showing signs of plateauing.

"Sky-high valuations of companies like OpenAI and Microsoft are largely based on the notion that LLMs will, with continued scaling, become artificial general intelligence," said AI expert and frequent critic Gary Marcus. "As I have always warned, that's just a fantasy."

- 'No wall' -

One fundamental challenge is the finite amount of language-based data available for AI training.

According to Scott Stevenson, CEO of AI legal tasks firm Spellbook, who works with OpenAI and other providers, relying on language data alone for scaling is destined to hit a wall.

"Some of the labs out there were way too focused on just feeding in more language, thinking it's just going to keep getting smarter," Stevenson explained.

Sasha Luccioni, researcher and AI lead at startup Hugging Face, argues a stall in progress was predictable given companies' focus on size rather than purpose in model development.

"The pursuit of AGI has always been unrealistic, and the 'bigger is better' approach to AI was bound to hit a limit eventually, and I think this is what we're seeing here," she told AFP.

The AI industry contests these interpretations, maintaining that progress toward human-level AI is unpredictable.

"There is no wall," OpenAI CEO Sam Altman posted Thursday on X, without elaboration.

Anthropic's CEO Dario Amodei, whose company develops the Claude chatbot in partnership with Amazon, remains bullish: "If you just eyeball the rate at which these capabilities are increasing, it does make you think that we'll get there by 2026 or 2027."

- Time to think -

Nevertheless, OpenAI has delayed the release of the long-awaited successor to GPT-4, the model that powers ChatGPT, because its gains in capability have fallen short of expectations, according to sources quoted by The Information.

Now, the company is focusing on using its existing capabilities more efficiently.

This shift in strategy is reflected in its recent o1 model, designed to provide more accurate answers through improved reasoning rather than more training data.

Stevenson said an OpenAI shift to teaching its model to "spend more time thinking rather than responding" has led to "radical improvements".

He likened the advent of AI to the discovery of fire: rather than tossing on more fuel in the form of data and computing power, he said, it is time to harness the breakthrough for specific tasks.

Stanford University professor Walter De Brouwer likens advanced LLMs to students transitioning from high school to university: "The AI baby was a chatbot which did a lot of improv" and was prone to mistakes, he noted.

"The homo sapiens approach of thinking before leaping is coming," he added.

E.AbuRizq--SF-PST